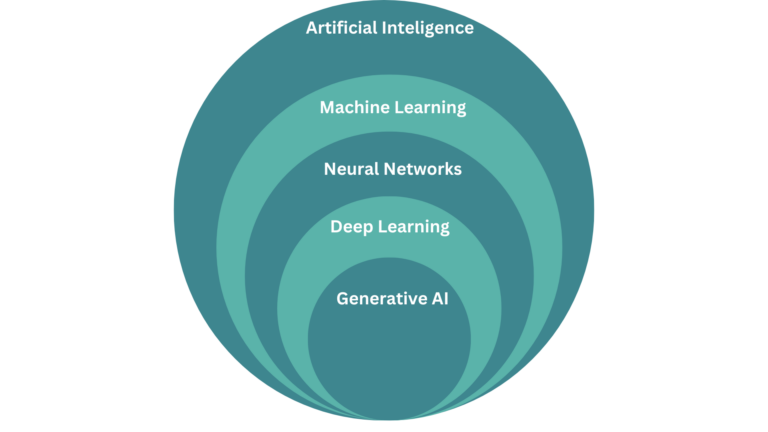

AI has been around since the 1950s, but only recently has it expanded at lightning speed, becoming an integral part of our daily lives. Confused by all the AI jargon? You’re not alone! This blog post unravels these buzzwords and explores the layers of Artificial Intelligence, from the broad scope of AI to the creative potential of Generative AI, breaking down each concept in a fun and easy-to-understand way.

Understanding the Basics of Artificial Intelligence

At the outermost layer, we have Artificial Intelligence (AI). What does it mean? AI refers to systems and machines designed to mimic human intelligence and perform tasks that typically require human cognitive functions. Think of Siri and Alexa, which – like human personal assistants – use AI to understand and respond to your commands, or algorithms on Netflix that – like a friend who recommends something you should watch on TV – suggest shows based on your viewing history.

One of the key areas of AI is Natural Language Processing (NLP), which enables machines to understand and respond to human language. This includes tasks like working out the meaning of words from the way they are arranged, recognizing speech, translating text between languages, and generating human-like text. For instance, Google Translate uses NLP to convert text between many languages, and customer service chatbots use NLP to respond to queries.

Another interesting area of AI is Computer Vision (CV), which allows machines to interpret and process visual information from the world, such as identifying objects in an image or analyzing video footage. Self-driving cars, for example, rely on computer vision to navigate roads and avoid obstacles.

AI also involves Knowledge Representation, which is the method of storing information about the world in a format that computers can utilize to solve complex problems. This enables systems to sift through vast amounts of data and provide insights in fields ranging from healthcare to finance.

Additionally, AI Ethics is a growing field focused on ensuring that AI systems are developed and deployed responsibly, considering the societal and ethical implications. This includes addressing issues like bias in algorithms and ensuring privacy protection.

Lastly, Cognitive Computing aims to simulate human thought processes in computerized models, striving to create systems that can think, learn, and adapt similarly to humans.

Diving Deeper into Machine Learning

Moving one layer in from the broad umbrella of AI, we find Machine Learning (ML), a fascinating subset where systems learn from data and improve their functionality on their own, as they gain experience. It’s a computer learning from experience, much like we humans do, but without the coffee breaks. ML is about algorithms that can identify patterns in data, make predictions based on their understanding of the data, and improve over time with more data.

One key concept in ML is Dimensionality Reduction, which involves simplifying data without losing significant information. Imagine trying to find a needle in a haystack – dimensionality reduction is like removing the excess hay so the needle becomes easier to find. For example, in facial recognition technology, this technique helps in reducing the complex data of a face image to essential features, making the system faster and more efficient.
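To make the idea concrete, here is a minimal sketch of one common dimensionality reduction technique, Principal Component Analysis (PCA), written in plain Python. It is only an illustration: the function name `pca_2d_to_1d` and the sample points are made up for this post, and real projects would normally rely on a library rather than hand-written math. The sketch takes points described by two numbers each and re-describes every point with a single number, measured along the direction in which the data varies the most.

```python
import math

def pca_2d_to_1d(points):
    """Project 2D points onto their first principal component."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    centered = [(x - mean_x, y - mean_y) for x, y in points]

    # Entries of the 2x2 covariance matrix.
    sxx = sum(x * x for x, _ in centered) / n
    syy = sum(y * y for _, y in centered) / n
    sxy = sum(x * y for x, y in centered) / n

    # Angle of the direction of greatest variance (closed form for 2x2).
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)
    direction = (math.cos(theta), math.sin(theta))

    # Each point is now described by one number instead of two.
    return [x * direction[0] + y * direction[1] for x, y in centered]

# Made-up sample data: points lying close to a straight line.
points = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.2), (4.0, 3.9), (5.0, 5.1)]
reduced = pca_2d_to_1d(points)
print([round(value, 2) for value in reduced])
```

Because the sample points sit almost on a line, one number per point keeps nearly all of the information, which is exactly the “removing the excess hay” effect.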

Unsupervised Learning is another intriguing aspect of ML, where the system identifies patterns in data without any pre-labeled outcomes. It’s like letting the computer explore a new city without a map, discovering landmarks and neighborhoods on its own. Clustering algorithms, which group similar data points together, are a prime example of unsupervised learning used in market segmentation to identify distinct customer groups.
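As a rough illustration of clustering, the sketch below implements a simplified one-dimensional version of the k-means algorithm in plain Python. The function name `k_means_1d`, the starting centroids, and the `monthly_spend` figures are all invented for this example. Notice that the data carries no labels: the algorithm is never told which customers are “low spenders” or “high spenders,” it only groups values that sit close together.

```python
def k_means_1d(values, centroids, iterations=10):
    """Group numbers around the nearest centroid, then re-center, repeatedly."""
    centroids = list(centroids)
    clusters = []
    for _ in range(iterations):
        # Assignment step: each value joins the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for value in values:
            nearest = min(
                range(len(centroids)),
                key=lambda i: abs(value - centroids[i]),
            )
            clusters[nearest].append(value)
        # Update step: move each centroid to the average of its cluster.
        centroids = [
            sum(cluster) / len(cluster) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return clusters, centroids

# Made-up monthly spend per customer, with no labels attached.
monthly_spend = [12, 15, 14, 80, 85, 90, 13, 88]
clusters, centers = k_means_1d(monthly_spend, centroids=[0, 100])
print(clusters)  # [[12, 15, 14, 13], [80, 85, 90, 88]]
print(centers)   # [13.5, 85.75]
```

The two groups that emerge could be read as two customer segments, which is the essence of market segmentation with clustering.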

Lastly, Reinforcement Learning and Ensemble Learning are powerful techniques in ML. Reinforcement Learning is about learning through trial and error: the system tries an action, receives a reward or a penalty, and gradually favors the actions that pay off. This approach is used in training AI for games like chess and Go. Ensemble Learning, on the other hand, involves combining multiple models to improve performance, akin to the saying “two heads are better than one.” By using a group of models to make decisions, systems can achieve better accuracy and robustness.
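The ensemble idea can be sketched in a few lines of Python using majority voting, one of the simplest ways to combine models. The three “models” below are deliberately naive, hypothetical rules invented for this post (real ensembles combine trained models), but they show how a group can reach a sensible decision even when one member gets it wrong.

```python
from collections import Counter

# Three deliberately simple, hypothetical spam "models".
def has_many_exclamations(message):
    return message.count("!") >= 3

def mentions_free(message):
    return "free" in message.lower()

def is_mostly_uppercase(message):
    letters = [ch for ch in message if ch.isalpha()]
    return bool(letters) and sum(ch.isupper() for ch in letters) / len(letters) > 0.5

def majority_vote(models, message):
    """Return the answer that most models agree on."""
    votes = [model(message) for model in models]
    return Counter(votes).most_common(1)[0][0]

models = [has_many_exclamations, mentions_free, is_mostly_uppercase]
print(majority_vote(models, "WIN a FREE prize now!!!"))     # True
print(majority_vote(models, "Lunch at noon? Free today."))  # False
```

In the first message the uppercase rule votes “not spam,” and in the second the “free” rule votes “spam,” yet the majority gets both right.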

Exploring the World of Neural Networks

Neural Networks are inspired by the structure of the human brain. These networks are the backbone of many advanced AI capabilities, enabling machines to perform tasks with human-like precision. Neural networks consist of layers of interconnected nodes, or “neurons,” that work together to process information.

At the core of neural networks are Perceptrons, the simplest type of neural network. Imagine them as the basic building blocks or the “Lego bricks” of a neural network. They take input data, perform a simple computation (a weighted sum of the inputs, compared against a threshold), and produce an output. In a larger network, that output is then sent to another perceptron, which takes it as its input and performs another simple computation. While perceptrons are quite basic, they form the foundation for more complex structures.
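Here is a minimal sketch of a single perceptron in plain Python, assuming the classic perceptron learning rule. It multiplies each input by a weight, adds a bias, and outputs 1 if the total is above zero. The names `predict` and `train_perceptron` and the choice of the logical AND task are illustrative assumptions, not part of any particular library.

```python
def predict(weights, bias, inputs):
    """Weighted sum of the inputs plus a bias, then a simple threshold."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def train_perceptron(examples, epochs=20, learning_rate=1):
    """Adjust weights a little whenever the perceptron answers wrongly."""
    weights = [0] * len(examples[0][0])
    bias = 0
    for _ in range(epochs):
        for inputs, expected in examples:
            error = expected - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

# Teach the perceptron the logical AND of two inputs.
and_gate = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_gate)
predictions = [predict(weights, bias, inputs) for inputs, _ in and_gate]
print(predictions)  # [0, 0, 0, 1]
```

A single perceptron can only learn patterns that a straight line can separate, which is why real networks stack many of them.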

Moving a step further, we have Convolutional Neural Networks (CNNs), which specialize in processing visual data. These networks excel at tasks like image recognition and classification.

Recurrent Neural Networks (RNNs) are designed to handle sequential data, making them ideal for tasks that involve time series or natural language processing. RNNs are used in applications like language translation and speech recognition, where the context of previous words or data points significantly impacts the current task.

Multi-Layer Perceptrons (MLPs), on the other hand, consist of multiple layers between the input and output, allowing them to model complex relationships in data. These networks are like a series of filters that refine and improve the data as it passes through each layer, used in applications ranging from predicting stock prices to diagnosing diseases.

Key to the functioning of neural networks are Activation Functions and Backpropagation. An Activation Function determines the output of each neuron and introduces non-linearity, allowing the network to solve more complex problems. Backpropagation is the method used for training neural networks, akin to how we learn from our mistakes. It measures the error of the network’s output and works backward through the layers, adjusting the weights of the connections between neurons to gradually improve the network’s accuracy. This technique is crucial for tasks like handwriting recognition and for training text-generating models such as the one behind ChatGPT.
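For the curious, the sketch below shows both ideas on the smallest network imaginable: one input, one hidden neuron, and one output neuron. It assumes the sigmoid activation function and a squared-error measure of the mistake, and the starting weights, the training example, and the learning rate are made-up values chosen only for illustration.

```python
import math

def sigmoid(z):
    """Activation function: squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

# A tiny network: input -> hidden neuron -> output neuron (one weight each).
x, target = 1.0, 1.0   # made-up training example
w1, w2 = 0.5, 0.5      # made-up starting weights
learning_rate = 0.5
losses = []

for step in range(20):
    # Forward pass: compute the prediction and how wrong it is.
    hidden = sigmoid(w1 * x)
    output = sigmoid(w2 * hidden)
    losses.append(0.5 * (output - target) ** 2)

    # Backward pass: apply the chain rule from the output back to the input.
    delta_out = (output - target) * output * (1 - output)
    grad_w2 = delta_out * hidden
    delta_hidden = delta_out * w2 * hidden * (1 - hidden)
    grad_w1 = delta_hidden * x

    # Nudge each weight in the direction that reduces the error.
    w2 -= learning_rate * grad_w2
    w1 -= learning_rate * grad_w1

print(f"loss before: {losses[0]:.4f}, after: {losses[-1]:.4f}")
```

Each pass through the loop is one round of “make a guess, measure the mistake, adjust,” and the recorded loss shrinks with every round.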

And don’t let the fancy terms scare you—they’re just clever names for how these systems learn and improve!

Unveiling the Depths of Deep Learning

Within the world of Machine Learning and Neural Networks lies the realm of Deep Learning, a subset characterized by networks with many layers, hence the term “deep.” These multi-layered networks are capable of learning and modeling complex patterns in vast amounts of data, making them exceptionally powerful.

Deep Neural Networks (DNNs) are at the core of deep learning. These networks consist of multiple hidden layers between the input and output layers, allowing them to model intricate relationships in data. The hidden layers process information step-by-step, and only the final result is visible, like a magic trick where you see the outcome but not the intricate steps involved. For example, DNNs are used in applications like voice assistants, which understand and respond to human speech, and in medical diagnostics, where they help identify diseases from medical images with high accuracy.

Generative Adversarial Networks (GANs) are another fascinating aspect of deep learning. GANs consist of two networks, a generator and a discriminator, that compete with each other. The generator creates new samples (for example, images) that resemble the training data, while the discriminator tries to tell the generated samples apart from real ones. Each network improves in response to the other, and this adversarial process results in remarkably realistic outputs, such as deepfake videos or AI-generated artwork.

Deep Reinforcement Learning (DRL) combines deep learning with reinforcement learning, enabling systems to learn optimal actions through trial and error. This approach is used in advanced robotics, where robots learn to navigate complex environments. By leveraging deep learning’s ability to process large amounts of data, DRL can tackle tasks that require both perception and decision-making.

The Innovative World of Generative AI

The most recent advancements in AI are in the field of Generative AI, which uses AI models to create new content. Generative AI powers applications that can produce text, images, video, music, and other creative content, pushing the boundaries of what machines can create. Gen AI technologies generate content that previously only humans could create through hard work, talent, and intelligence, making it a transformative tool across industries.

Large Language Models (LLMs) are fundamental to Generative AI. An LLM’s talent is essentially predicting the next word in a sequence. This is the backbone of applications like autocomplete and text generation. But when a word follows another word, and thousands of words are combined into logical and insightful paragraphs, articles, and books, magic happens. Models like OpenAI’s ChatGPT and Anthropic’s Claude can generate coherent and contextually relevant text on a vast range of subjects, drawing on expertise that was documented in a form such models could train on and learn from. We can only scratch the surface of how ChatGPT is changing the world here. If you haven’t tried it yet, give it a go.
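Next-word prediction can be illustrated with a toy model in plain Python. To be clear, this is not how an LLM works internally: LLMs use large neural networks trained on enormous amounts of text, while the sketch below simply counts which word most often follows another in a tiny made-up sentence. The function names `train_bigram_model` and `predict_next` are invented for this example.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def predict_next(model, word):
    """Return the most frequent follower of a word, or None if unseen."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

# Made-up training text.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigram_model(corpus)
print(predict_next(model, "the"))  # cat
print(predict_next(model, "sat"))  # on
print(predict_next(model, "dog"))  # None
```

Your phone’s autocomplete is a close cousin of this idea. LLMs take the same goal much further by looking at the whole preceding context rather than just the previous word.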

Key to LLM capabilities is the Transformer Architecture, a neural network design built to handle sequential data efficiently. Transformers use a Self-Attention Mechanism, which lets the model weigh how relevant every other part of the input sequence is when processing each word, so it can understand the context better. This architecture is crucial for Natural Language Processing (NLP) tasks, enabling models to comprehend and generate human language with remarkable accuracy.
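The core calculation behind self-attention can be sketched in plain Python. This is a simplified version under a stated assumption: real Transformers first pass each word’s vector through learned “query,” “key,” and “value” transformations, while the sketch below skips that step and uses the word vectors directly. The three tiny vectors are made-up stand-ins for words.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that add up to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product attention with queries = keys = values = vectors."""
    dim = len(vectors[0])
    weights, outputs = [], []
    for query in vectors:
        # How similar is this word to every word in the sequence?
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        row = softmax(scores)
        weights.append(row)
        # New representation: a weighted blend of all the word vectors.
        outputs.append(
            [sum(w * v[d] for w, v in zip(row, vectors)) for d in range(dim)]
        )
    return weights, outputs

# Made-up 2-number "word vectors": the first two are similar, the third is not.
vectors = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
weights, outputs = self_attention(vectors)
print([round(w, 2) for w in weights[0]])
```

The first word ends up paying more attention to the second (similar) word than to the third (unrelated) one, which is how context finds its way into each word’s representation.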

Generative AI also utilizes Dialogue Systems, capable of engaging in conversations with humans. These systems use Transfer Learning to leverage knowledge from one task and improve performance on another, enhancing their conversational abilities. By continuously learning and adapting, generative AI systems are becoming more sophisticated and human-like in their interactions.

The Turing Test, proposed by Alan Turing in 1950, uses a text conversation to judge the intelligence of a system. In this test, a human judge chats with both a machine and another human without knowing which is which. If the judge can’t tell them apart, the machine is considered intelligent. This idea has become a key part of understanding and testing artificial intelligence.

Learning AI in Practice with Wawiwa

Wawiwa is a global tech education provider offering reskilling and upskilling programs for various tech and business jobs. Wawiwa reskills people with no background in programming for sought-after jobs as Frontend Developers, Full-Stack Developers, and other tech professions.

In addition to reskilling programs, Wawiwa also offers targeted AI Upskilling Courses designed for programmers looking to enhance their expertise.

For example:

- Practical Data Science for Developers is an 8-hour seminar that equips software developers with an introductory understanding of key data science concepts. It gives essential insights to integrate Machine Learning, Deep Learning, and Generative AI into software development projects.

- Machine Learning for Programmers is an intensive 40-hour course that equips software developers with the skills needed to implement Machine Learning algorithms and solutions effectively. Participants develop essential Python skills, from basic programming to advanced data handling with Pandas and NumPy. Graduates of this course can tackle real-world data science problems using strategies such as handling imbalanced data and ensemble methods.

Both courses are structured to provide hands-on experience, ensuring that participants understand the theoretical underpinnings of the different layers of AI and gain the practical skills necessary to apply these techniques in their projects.

Now Use These Terms in a Sentence!

AI is everywhere, and after reading this blog post, you’re at least ready to “talk the talk.” So next time you hear someone mention an AI term, don’t shy away. Join the conversation and be part of the discussions about what our lives alongside the machines will look like!